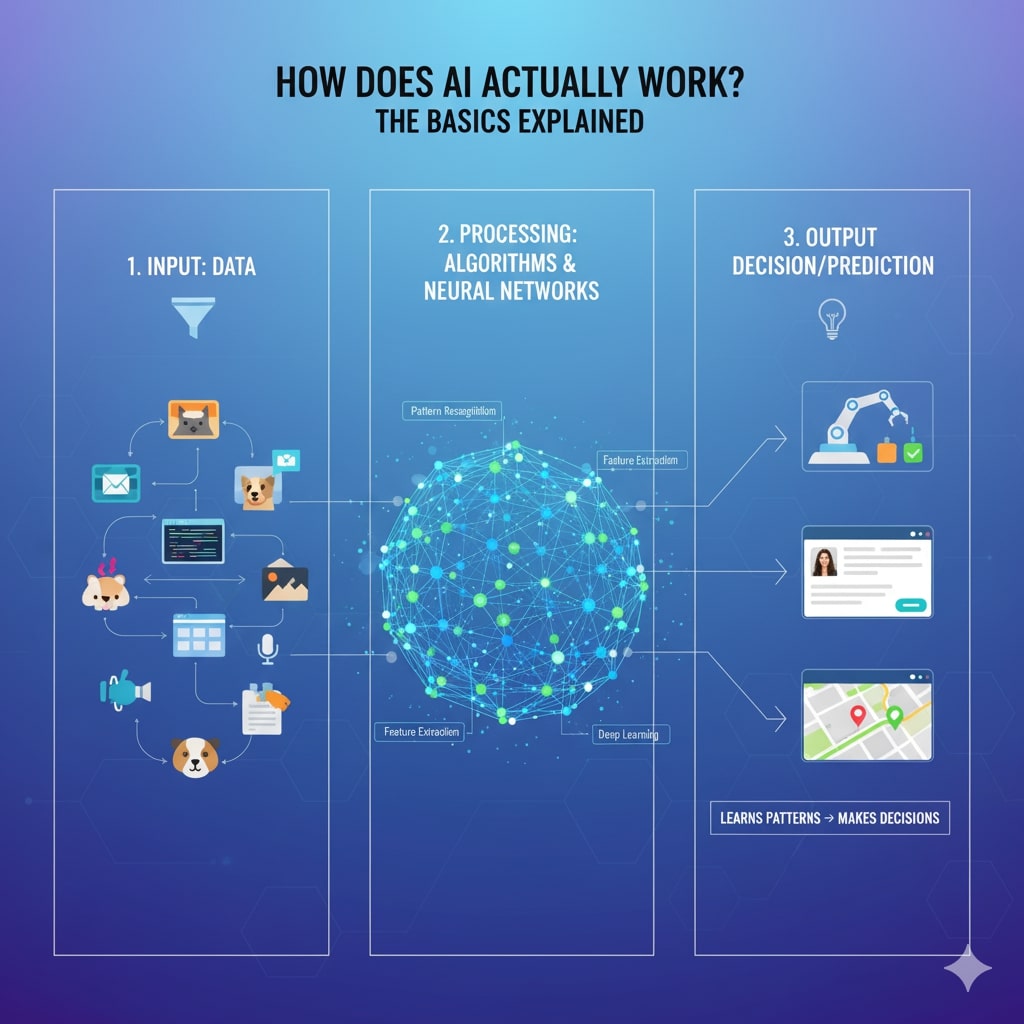

The Conceptual Foundation: What AI Really Is

Artificial Intelligence (AI) is a term that often conjures images of sentient robots or dystopian futures. However, in its current and most prevalent form, AI is much less science fiction and much more advanced mathematics. In essence, AI is the capability of a machine to simulate human intelligence, performing tasks such as learning, reasoning, problem-solving, perception, and decision-making. To put it simply, AI isn’t about creating a brain; it’s about creating a powerful tool that uses logic and data to make predictions or take actions.

The field is vast, but today it is primarily underpinned by Machine Learning (ML), a subset of AI that focuses on building systems that learn from data rather than being explicitly programmed with rules. Instead of following hand-written instructions for every case, the machine improves its performance on a specific task as it is exposed to more examples. When people talk about the AI revolution, they are almost always referring to rapid advances in machine learning and, in particular, its most powerful branch, Deep Learning.

Data: The Essential Fuel for the AI Engine

Every machine learning system begins with data. Data is the fundamental ingredient, the “fuel” that powers the entire learning process. Without enough relevant, high-quality data, even a sophisticated model will learn the wrong patterns or fail to learn useful ones at all.

The Role of Datasets

AI models rely on large datasets, which can include images, text, numbers, sound clips, or video. For instance, if you want an AI to identify cats, you must feed it thousands or even millions of pictures, labeled to show which ones contain cats and which do not. Similarly, if you train an AI model to detect fraud, you feed it large numbers of transaction records, each labeled as “legitimate” or “fraudulent.”

This data must go through a critical preparation phase:

- Cleaning: Raw data is often messy, containing errors, missing values, or irrelevant information, so it must be cleaned and preprocessed to ensure consistency.

- Structuring: Next, the data is organized into a format the algorithm can understand. Typically, this means converting real-world things into numbers; a picture, for example, becomes an array of pixel values.

- Labeling: In many cases, especially with supervised learning, humans must label the data by hand. A correctly labeled dataset is crucial, because mislabeled examples teach the model the wrong patterns.
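To make the three preparation steps concrete, here is a minimal Python sketch for the fraud-detection example. The records, field names (`amount`, `country`, `label`), and helper functions are hypothetical, and dropping incomplete rows is only one of many possible cleaning policies.

```python
# Hypothetical raw records for a fraud-detection dataset (illustrative only).
raw_records = [
    {"amount": "120.50", "country": "US", "label": "legitimate"},
    {"amount": "", "country": "FR", "label": "fraudulent"},        # missing amount
    {"amount": "9800.00", "country": "us", "label": "fraudulent"},  # inconsistent casing
    {"amount": "42.00", "country": "DE", "label": "legitimate"},
]

def clean(records):
    """Cleaning: drop rows with a missing amount and normalise country codes."""
    cleaned = []
    for r in records:
        if not r["amount"]:
            continue  # simplest policy: discard incomplete rows
        cleaned.append({
            "amount": float(r["amount"]),
            "country": r["country"].upper(),
            "label": r["label"],
        })
    return cleaned

def to_vectors(records, countries):
    """Structuring: turn each record into a numeric vector (amount + one-hot country)."""
    vectors = []
    for r in records:
        one_hot = [1.0 if r["country"] == c else 0.0 for c in countries]
        vectors.append([r["amount"]] + one_hot)
    return vectors

def encode_labels(records):
    """Labeling: map human-assigned text labels to integers (1 = fraudulent)."""
    return [1 if r["label"] == "fraudulent" else 0 for r in records]

rows = clean(raw_records)
countries = sorted({r["country"] for r in rows})
X = to_vectors(rows, countries)
y = encode_labels(rows)
print(countries)  # ['DE', 'US']
print(X)
print(y)          # [0, 1, 0]
```

Real pipelines usually also rescale numeric features such as `amount`, since raw magnitudes can dominate other features.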

Machine Learning: The Core Learning Algorithms

The actual “learning” happens through algorithms (sets of instructions and mathematical formulas) that process the data. The result of this process is a model, which is essentially a mathematical representation of the patterns the algorithm has discovered in the training data. Machine learning is commonly divided into three primary types, which differ in the kind of feedback the model receives while it learns.

1. Supervised Learning

Supervised Learning is the most common approach. In this method, the AI is trained on data that is already labeled; in other words, for each example the model is given both the input and the correct output.

- Process: The algorithm uses the labeled data to learn a mapping from inputs to outputs. It works much like a student learning with flashcards: it sees a picture of a dog (input) and is told it’s a “Dog” (label/output). If it guesses wrong, the algorithm adjusts its internal parameters (weights) to get closer to the correct answer next time.

- Applications: Supervised learning is used for classification tasks such as image recognition and email spam filtering, and for regression tasks such as predicting house prices.
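The flashcard-style loop can be sketched with a perceptron, one of the simplest supervised learning algorithms. This is an illustrative example assuming a tiny made-up dataset with two numeric features per example; real image classifiers learn from far richer inputs.

```python
# Toy labeled dataset (hypothetical): [feature1, feature2] -> label (1 = "dog", 0 = "not dog").
training_data = [
    ([2.0, 1.0], 1),
    ([3.0, 2.0], 1),
    ([-1.0, -2.0], 0),
    ([-2.0, -1.0], 0),
]

def predict(weights, bias, x):
    """Guess 1 if the weighted sum of the features is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0

def train_perceptron(data, epochs=10, learning_rate=0.1):
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, label in data:
            error = label - predict(weights, bias, x)  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                # Wrong guess: nudge each weight toward the correct answer.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
    return weights, bias

weights, bias = train_perceptron(training_data)
print([predict(weights, bias, x) for x, _ in training_data])  # [1, 1, 0, 0]
```

The weights change only when the model guesses wrong, which mirrors the “adjust after a mistake” idea described above.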

2. Unsupervised Learning

Unsupervised Learning, by contrast, trains the AI on unlabeled data. Without labels to guide it, the algorithm must find hidden patterns and intrinsic structures within the data on its own.

- Process: The model clusters or groups data points based on their similarities without any prior guidance. For example, it might group customers with similar purchasing habits, even though no one told the model which groups to look for.

- Applications: It is commonly used for market segmentation, anomaly detection (like finding unusual transactions), and dimensionality reduction, which compresses data into fewer, more informative features.
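As a minimal sketch of clustering, the following Python code implements k-means, a common unsupervised algorithm chosen here for illustration (the article does not name a specific one). The customer data is made up, and the naive initialization and fixed iteration count are simplifying assumptions.

```python
import math

# Hypothetical customers: (monthly visits, average basket size in dollars). No labels.
customers = [(1, 20), (2, 25), (1, 22), (8, 90), (9, 95), (10, 85)]

def kmeans(points, k, iterations=10):
    centroids = list(points[:k])  # naive initialisation: the first k points
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points
        # (keep the old centroid if a cluster ends up empty).
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

centroids, clusters = kmeans(customers, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

Nobody tells the algorithm that “occasional small shoppers” and “frequent big spenders” exist; the two groups emerge from distances alone. In practice, features are usually rescaled first so that one large-valued feature does not dominate the distance.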

3. Reinforcement Learning

Reinforcement Learning (RL) works differently, somewhat like training an animal with rewards. An AI agent learns to perform a task by interacting with an environment through trial and error.

- Process: The agent receives a reward for taking a desirable action and a penalty for an undesirable one. Over many attempts, it learns a policy (a strategy for choosing actions) that maximizes its total reward. Crucially, there are no fixed labels; the learning is driven entirely by this feedback loop.

- Applications: RL excels at complex, sequential decision-making problems such as controlling robotic movement, research into autonomous vehicles, and game-playing systems for chess or Go.
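The reward-driven loop can be illustrated with tabular Q-learning, one classic RL algorithm, on a made-up five-cell corridor where the agent earns a reward for reaching the last cell. The environment, reward values, and hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for a small, self-contained sketch.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = [-1, +1]    # index 0 = move left, index 1 = move right

def step(state, action):
    """Hypothetical environment: +1 for reaching the goal, a small penalty otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False

def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Epsilon-greedy: usually exploit the best known action, sometimes
            # explore at random (ties are also broken at random).
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state, steps = next_state, steps + 1
    return q

q_table = train_q_learning()
policy = ["right" if q[1] > q[0] else "left" for q in q_table[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

No cell is ever labeled with the “correct” move; the policy of always moving right emerges purely from rewards and penalties.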

Deep Learning: The Power of Neural Networks

Much of the recent revolution in AI has been driven by Deep Learning, a specialized subset of Machine Learning. Deep Learning models use Artificial Neural Networks (ANNs), computational systems loosely inspired by the structure of the human brain.

The Artificial Neuron

The fundamental building block of a neural network is the artificial neuron; the perceptron is its earliest and simplest form.

- Input: First, a neuron receives multiple inputs, which could be the pixels of an image or the outputs of other neurons.

- Weights and Biases: Next, each input is multiplied by a weight, which represents the importance or influence of that input, and the results are summed. A bias value is then added. The weights and biases are the parameters the AI learns and adjusts during training.

- Activation Function: Finally, the weighted sum passes through an activation function, which determines the neuron’s output. Early designs used a hard threshold, so the neuron either “fired” or stayed silent; modern networks typically use functions such as sigmoid or ReLU, which introduce the nonlinearity that lets stacked layers model complex patterns.
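The three steps of a single neuron fit in a few lines of Python. The input values, weights, and bias below are arbitrary numbers chosen for illustration, and sigmoid and ReLU are shown as two common activation choices.

```python
import math

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs plus bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))  # squashes any value into (0, 1)

def relu(z):
    return max(0.0, z)  # passes positive values through, zeroes out negatives

inputs = [0.5, -1.0, 2.0]   # e.g. three pixel intensities (made-up values)
weights = [0.8, 0.2, -0.5]  # hypothetical learned weights
bias = 0.1

# Weighted sum: 0.4 - 0.2 - 1.0 + 0.1 = -0.7
print(neuron(inputs, weights, bias, relu))               # 0.0 (negative sum is zeroed)
print(round(neuron(inputs, weights, bias, sigmoid), 3))  # 0.332
```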

The Network Structure

Unlike shallow neural networks, which have at most one hidden layer, deep learning networks have multiple hidden layers between the input and output layers. This depth allows the network to learn increasingly complex and abstract representations of the data.

- Input Layer: This layer receives the raw data (e.g., the pixel values of an image).

- Hidden Layers (The “Deep” Part): These layers perform most of the computation. For example, in an image network, the first hidden layer might detect simple edges, the next might combine them into shapes, and later layers might assemble the shapes into high-level features like eyes or wheels.

- Output Layer: This layer provides the final result, such as the classification (“Dog,” “Cat,” “Car”) or a generated piece of text.
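Stacking neurons into layers gives a forward pass through a small network. The following sketch assumes a hypothetical network with three inputs, two hidden layers (four and three neurons), and three output classes; the weights are hand-picked rather than learned, and a softmax turns the output scores into probabilities.

```python
import math

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def relu(z):
    return max(0.0, z)

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

pixels = [0.9, 0.1, 0.4]  # input layer: made-up pixel values
hidden1 = dense(pixels,
                [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5], [-0.6, 0.1, 0.9], [0.2, 0.2, 0.2]],
                [0.0, 0.1, 0.0, -0.1], relu)
hidden2 = dense(hidden1,
                [[1.0, -1.0, 0.5, 0.0], [0.2, 0.4, -0.3, 1.0], [-0.5, 0.5, 0.5, 0.5]],
                [0.0, 0.0, 0.1], relu)
scores = dense(hidden2,  # output layer: one raw score per class
               [[2.0, 0.0, -1.0], [0.0, 1.0, 0.0], [-1.0, 0.0, 2.0]],
               [0.0, 0.0, 0.0])
probs = softmax(scores)
for label, p in zip(["Dog", "Cat", "Car"], probs):
    print(label, round(p, 3))
```

With random or hand-picked weights the probabilities are meaningless; training is what makes them useful.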

The Learning Process: Backpropagation

So, how does a Deep Learning network actually learn? The key algorithm is backpropagation, which works together with an optimization method such as gradient descent, and it is one of the most important breakthroughs in AI.

- Forward Pass: First, the input data is fed forward through the network, layer by layer, until it produces an output (a prediction).

- Calculating Loss: Then, the predicted output is compared to the correct answer (the ground truth/label) to calculate the loss or error.

- Backward Pass (Backpropagation): Next, this error is propagated backward through the network. Using the chain rule from calculus, the algorithm determines how much each individual weight contributed to the overall error; that is, it calculates the “gradient” of the loss function with respect to every weight.

- Weight Adjustment: Finally, an optimization method such as gradient descent uses these gradients to adjust every weight slightly in the direction that reduces the error on the next attempt.

The network repeats this cycle millions or billions of times across a large dataset. Over time, the weights are fine-tuned until the network can accurately map complex inputs to correct outputs, ideally even for data it has never seen before (an ability known as generalization).
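The four steps can be seen end to end in a minimal case: a single sigmoid neuron learning the logical OR function with squared-error loss and per-example gradient descent. This is a simplified sketch, not how production frameworks implement it; in a real deep network the chain rule is applied through every layer, usually by automatic differentiation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Logical OR as a tiny labeled dataset: (inputs, ground-truth label).
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

w = [0.0, 0.0]
b = 0.0
learning_rate = 1.0
loss_history = []

for epoch in range(5000):
    epoch_loss = 0.0
    for x, y in data:
        # Forward pass: weighted sum, then activation.
        z = w[0] * x[0] + w[1] * x[1] + b
        p = sigmoid(z)
        # Loss: squared error between prediction and label.
        epoch_loss += 0.5 * (p - y) ** 2
        # Backward pass (chain rule): dL/dz = dL/dp * dp/dz.
        dL_dp = p - y
        dp_dz = p * (1.0 - p)
        dL_dz = dL_dp * dp_dz
        # Since dz/dw_i = x_i and dz/db = 1:
        grad_w = [dL_dz * x[0], dL_dz * x[1]]
        grad_b = dL_dz
        # Weight adjustment (gradient descent): step against the gradient.
        w = [w[0] - learning_rate * grad_w[0], w[1] - learning_rate * grad_w[1]]
        b -= learning_rate * grad_b
    loss_history.append(epoch_loss)

predictions = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in data]
print(predictions)  # [0, 1, 1, 1]
```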

Modern AI: Large Language Models and Generative AI

Moving on to the present day, the AI that currently dominates headlines is Generative AI and its core technology, the Large Language Model (LLM).

The Transformer Architecture

LLMs (such as the models behind ChatGPT or Gemini) are deep learning models built on a specific architecture called the Transformer. The Transformer allows the model to process sequences of data (like words in a sentence) and focus on the most relevant parts of the sequence. This capability comes from a mechanism known as Self-Attention.

- Self-Attention: Self-Attention lets the model weigh the importance of every other word in the input sequence when processing any single word. For instance, in the sentence “The animal didn’t cross the street because it was too tired,” the word “it” refers to “animal,” not “street.” During training, the attention mechanism learns to capture relationships like this one without being explicitly told about them.
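Self-attention is commonly implemented as scaled dot-product attention. The sketch below is simplified under two assumptions: the three-dimensional word vectors are made up (and deliberately chosen so that “it” resembles “animal”), and the learned query, key, and value projection matrices of a real Transformer are omitted.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = the input vectors.

    Real Transformers first multiply the inputs by learned matrices (W_Q, W_K, W_V);
    they are omitted here to keep the sketch short.
    """
    d = len(vectors[0])
    weights, outputs = [], []
    for q in vectors:
        # Score every token (including itself) by dot product, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        attn = softmax(scores)  # attention weights for this token sum to 1
        weights.append(attn)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(a * v[j] for a, v in zip(attn, vectors)) for j in range(d)])
    return weights, outputs

tokens = ["animal", "street", "it"]
embeddings = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [0.9, 0.1, 0.8]]  # made-up vectors
weights, outputs = self_attention(embeddings)
for tok, row in zip(tokens, weights):
    print(tok, [round(a, 2) for a in row])
```

Because the toy vectors were picked by hand, “it” attends more to “animal” than to “street” by construction; in a trained model, similar behavior has to be learned from data.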

Generating Content

Once an LLM has been trained on a massive corpus of text (often including large portions of the public internet), it becomes a highly sophisticated prediction engine.

- Prediction: When you type a prompt, the LLM processes it and computes a probability for every possible next token (a word or part of a word). It then picks the next token, either the most probable one or a random choice weighted by those probabilities.

- Iteration: It appends that token to the sequence and repeats the process to predict the token after that.

- Creation: By repeating this loop hundreds or thousands of times, the model generates long, coherent, and seemingly creative output, be it an email, an article, or computer code.
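The predict, append, and repeat loop can be sketched with a toy model. Here a hypothetical table of next-word probabilities (`NEXT_WORD_PROBS`) stands in for a trained LLM; unlike a real LLM, it looks only at the previous word rather than the whole context, and it works with whole words instead of tokens.

```python
import random

# Hypothetical next-word probabilities standing in for a trained model.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 0.7, "cat": 0.3},
    "cat": {"sat": 0.8, "ran": 0.2},
    "dog": {"ran": 0.6, "sat": 0.4},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}

def generate(max_words=10, greedy=True, seed=None):
    rng = random.Random(seed)
    sequence = ["<start>"]
    for _ in range(max_words):
        probs = NEXT_WORD_PROBS[sequence[-1]]  # prediction: distribution over next words
        if greedy:
            next_word = max(probs, key=probs.get)  # always take the most probable word
        else:
            words = list(probs)
            next_word = rng.choices(words, weights=[probs[w] for w in words])[0]
        if next_word == "<end>":
            break
        sequence.append(next_word)  # iteration: feed the choice back in and repeat
    return " ".join(sequence[1:])

print(generate())                       # the cat sat
print(generate(greedy=False, seed=42))  # sampled; the result depends on the seed
```

Greedy decoding always produces the same sentence, while sampling lets less likely continuations appear, which is one reason real chatbots can answer the same prompt differently.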

Conclusion: A Continuous Evolution

In short, AI isn’t a single magical entity; rather, it is an ecosystem of computational models, mathematical algorithms, and an immense volume of data. The fundamental principle remains the same: machines learn from data, identify patterns, and adjust their internal parameters to improve their predictions or decisions. As data volumes grow and computing power increases, AI models are likely to keep evolving and become even more sophisticated, reshaping how we interact with technology and the world around us.

For a visual explanation of these concepts, watch the video “SIMPLEST Explanation of How Artificial Intelligence Works? No Jargon | What is AI? How AI works?”, which promises a jargon-free walkthrough of the same basics covered in this article.
