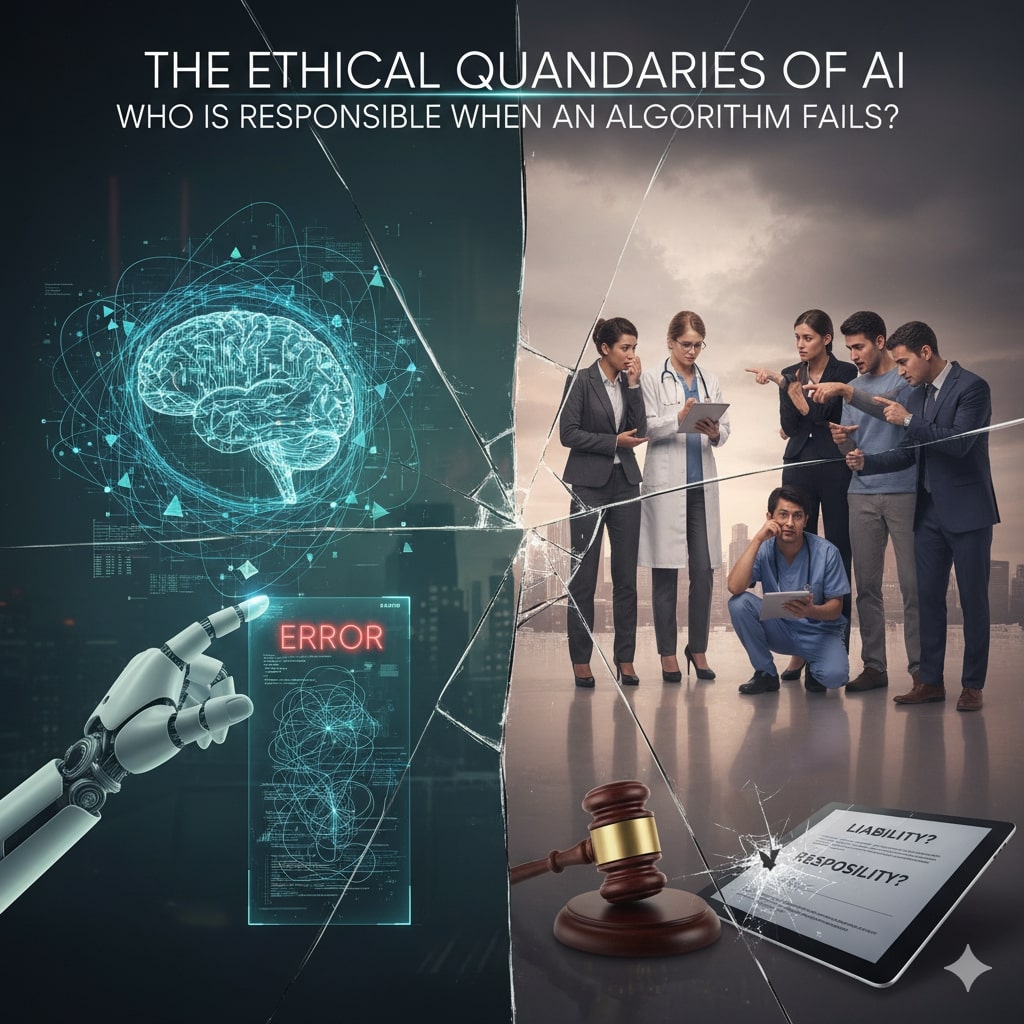

Artificial Intelligence (AI) has become an integral part of modern society, impacting areas from healthcare to finance, law enforcement to entertainment. As algorithms increasingly make decisions with minimal human intervention, they promise efficiency, accuracy and innovation. However, this growing reliance also raises a critical question: when an AI algorithm fails, resulting in harm or injustice, who is responsible?

This article explores the ethical dilemmas surrounding AI failures, examines current accountability frameworks and suggests ways society can approach responsibility in the age of intelligent machines.

The Rise of AI and Its Expanding Influence

Over the past decade, AI technologies have evolved rapidly. Machine learning models can analyze vast datasets to identify patterns, make predictions and even generate content. Consequently, AI now powers applications such as autonomous vehicles, medical diagnosis tools, credit scoring systems and criminal risk assessments.

Despite these advancements, AI is far from infallible. Algorithms can malfunction, produce biased outcomes, or misinterpret data. If a self-driving car misjudges a traffic situation and causes an accident, or a recruitment AI unfairly filters out candidates from certain demographics, the consequences are serious. This reality forces us to grapple with fundamental questions about accountability and ethics.

Understanding Algorithmic Failures

Before addressing responsibility, it is essential to understand what constitutes an AI failure. Algorithmic failure occurs when an AI system’s decision leads to outcomes that deviate significantly from expected behavior or from ethical standards. These failures may stem from multiple factors, including:

- Data bias: AI relies on training data, which can reflect historical prejudices or incomplete information.

- Design flaws: Faults in the algorithm’s architecture or logic can cause erroneous outputs.

- Unintended consequences: Complex AI may behave unpredictably in unforeseen scenarios.

- User misuse: Incorrect deployment or misunderstanding of AI capabilities can exacerbate failures.

For these reasons, AI failures often result from a combination of technical, social and organizational issues.
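The data-bias factor above can be made concrete by comparing how often a system selects candidates from different groups. The following is a minimal sketch, not a complete fairness audit: the function names, the group labels and the sample records are all hypothetical, and the 0.8 threshold is borrowed from the "four-fifths" rule of thumb used in US employment guidance rather than from anything in this article.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of positive outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the system chose that candidate.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest one."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected in any group, so there is no disparity to report.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical screening results: 6 of 10 selected in one group, 3 of 10 in the other.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

ratio = disparate_impact_ratio(sample)
print(round(ratio, 2))  # 0.5
if ratio < 0.8:  # assumed threshold, see the note above
    print("Selection rates differ enough to warrant a closer review.")
```

A low ratio does not by itself prove that the system is unfair, and a high one does not prove that it is fair. The value of a check like this is that it gives developers and deploying companies a shared, repeatable signal to investigate.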

Who Can Be Held Responsible?

Attributing responsibility in AI failures is complex due to the involvement of diverse stakeholders. These include:

- Developers and programmers: Responsible for coding, testing and updating AI systems.

- Data providers: Individuals or organizations supplying training data that may be flawed.

- Companies deploying the AI: Firms choosing to implement AI tools and overseeing their use.

- Users: End-users who interact with the AI and may misuse or misinterpret its results.

- Regulators and policymakers: Entities setting standards and legal frameworks for AI use.

Ethical Frameworks for AI Accountability

To navigate AI’s ethical quandaries, several accountability models have emerged:

1. Developer Accountability

Developers bear significant responsibility because they design and program the algorithms. Thus, they must ensure the AI adheres to ethical standards by rigorously testing for biases, errors and unintended harms. They should also be transparent about the AI’s limitations.

However, since AI systems often learn and evolve after deployment, developers might not control all outcomes. Therefore, holding only developers accountable can be unfair.

2. Corporate Responsibility

Companies deploying AI must implement governance processes. These include conducting ethical impact assessments, monitoring AI performance in real time and offering recourse mechanisms for harmed individuals.

Corporations hold economic power and influence over AI application, making their responsibility crucial. Still, the complexity of AI systems often means companies depend on third-party developers or data providers, diffusing accountability.

3. User Responsibility

In some cases, errors occur due to user misapplication or overreliance on AI decisions without due diligence. Users, including professionals like doctors or judges using AI tools, must retain critical oversight.

Nonetheless, expecting users to fully understand complex AI may be unrealistic, limiting the effectiveness of user accountability alone.

4. Regulatory Oversight

Governments and regulators play a vital role by establishing legal frameworks that clarify liability, mandate transparency and enforce ethical AI practices.

For example, the European Union’s AI Act imposes strict requirements on high-risk AI applications. Effective regulation can incentivize responsible AI deployment and provide victims with remedies.

Nevertheless, legislation often lags behind technological innovation, making adaptive regulatory models necessary.

Real-World Examples Illustrating Responsibility Challenges

Several incidents highlight the complexity of AI accountability:

- Autonomous Vehicle Accidents: When Tesla’s Autopilot system has been involved in crashes, questions have arisen about whether the manufacturer, the software developers or the drivers are responsible. Liability is often contested in court, with no clear precedent.

- Algorithmic Bias in Hiring: Recruiting AI tools have been criticized for discriminating against women or minorities due to biased training data. Here, both developers and the companies that deploy the tools share accountability.

- Healthcare Misdiagnosis: AI-powered diagnostic tools can improve accuracy, but failures may endanger patients. Responsibility may lie with developers, hospitals, or practitioners depending on the context and oversight.

These cases underline that responsibility is rarely confined to a single party. Instead, a distributed accountability approach may be required.

The Case for Shared Responsibility

Given the multifaceted nature of AI systems, many ethicists advocate for shared responsibility. This approach involves all stakeholders—developers, corporations, users and regulators—collaborating to ensure ethical outcomes.

Shared responsibility implies:

- Transparency: Disclosing AI decision-making processes and limitations.

- Continuous Monitoring: Acting proactively to identify and correct faults.

- Public Engagement: Including impacted communities in AI governance.

- Clear Liability Rules: Defining how responsibilities are distributed legally.

Such a framework can help mitigate blame games and encourage cooperation to improve AI systems continually.

Challenges in Enforcing Accountability

Despite the theoretical appeal of shared responsibility, practical enforcement faces hurdles:

- Opacity of AI Systems: Many AI models, especially deep learning models, operate as “black boxes” whose decisions are difficult to explain.

- Global Jurisdiction Issues: AI operates across borders, complicating legal and ethical standards.

- Rapid Innovation: Laws and standards struggle to keep pace with evolving AI technologies.

- Resource Disparities: Smaller companies or countries may lack capacity to implement robust oversight.

Addressing these challenges requires concerted interdisciplinary efforts involving technologists, ethicists, legal experts and policymakers.

Emerging Solutions and Best Practices

To improve accountability when AI fails, several promising solutions have emerged:

- Explainable AI (XAI): Developing models that can clarify how decisions are made aids transparency and user trust.

- Ethics-by-Design: Integrating ethical considerations at each stage of AI development supports responsible innovation.

- Impact Assessments: Systematic evaluations before deploying AI help detect potential harms early.

- Audit Trails: Keeping comprehensive logs of AI decisions can aid in tracing faults and assigning responsibility.

- Insurance Models: New liability insurance tailored for AI risks can provide financial remedies without lengthy litigation.

Combining these practices with regulatory frameworks creates a more resilient governance ecosystem.
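Of the practices listed above, audit trails are the easiest to illustrate in code. The sketch below shows one possible shape for a decision log, assuming each entry stores the model version, the inputs, the decision and the responsible operator, and links to the previous entry by hash so that later edits can be detected. The `AuditTrail` class, its field names and the sample loan decisions are hypothetical. A production system would also need durable storage and access controls, which are omitted here.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """In-memory, hash-chained log of automated decisions (illustrative only)."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself, in a stable key order.
        body = {key: value for key, value in entry.items() if key != "entry_hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, model_version, inputs, decision, operator):
        """Append one decision and link it to the previous entry."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else ""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "decision": decision,
            "operator": operator,
            "prev_hash": prev_hash,
        }
        entry["entry_hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry was altered, removed or reordered."""
        prev_hash = ""
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["entry_hash"] != self._digest(entry):
                return False
            prev_hash = entry["entry_hash"]
        return True


trail = AuditTrail()
trail.record("credit-model-1.2", {"income": 42000, "term_months": 36}, "deny", "bank-ops")
trail.record("credit-model-1.2", {"income": 58000, "term_months": 24}, "approve", "bank-ops")
print(trail.verify())  # True

# Quietly changing an old decision breaks the chain.
trail.entries[0]["decision"] = "approve"
print(trail.verify())  # False
```

Recording the model version and the operator alongside each decision is what connects a log like this to the accountability question: when a harmful outcome is traced back, the entry shows which system and which deploying party were involved.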

A Call for Ethical AI Culture

Ultimately, responsibility transcends legal and technical measures. It demands cultivating an ethical AI culture in which all participants prioritize human welfare, fairness and accountability.

For instance, companies should foster ethical training for developers and executives. Consumers need digital literacy to understand AI limitations. Governments must engage stakeholders in policymaking, ensuring inclusivity and justice.

Only through such a cultural shift will society harness AI’s benefits while minimizing harms.

Conclusion

AI algorithms significantly impact lives across domains, but their failures provoke difficult ethical questions about who is responsible. Rather than focusing blame on a single actor, responsibility must be shared among developers, companies, users and regulators, supported by transparency, continuous oversight and clear legal guidelines.

As AI continues to evolve, society must proactively address these ethical quandaries. Through collaboration, innovation and ethical commitment, we can ensure AI serves humanity’s best interests while safeguarding fairness and accountability when things go wrong.