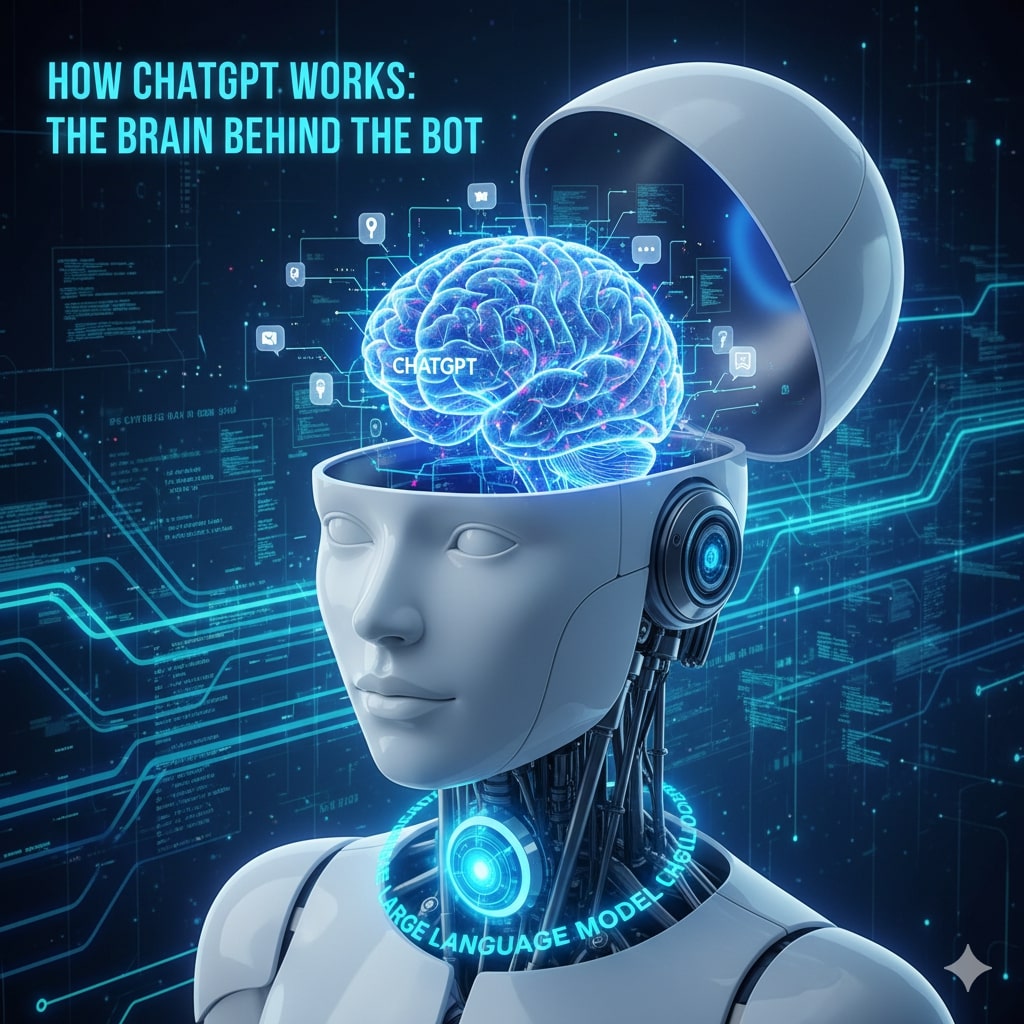

ChatGPT, created by OpenAI, has become a global sensation, captivating users with its ability to hold human-like conversations, answer complex questions, and even generate creative content like poems or code. From students seeking homework help to professionals drafting emails, millions rely on this AI-powered chatbot daily. But what powers this remarkable tool? How does it process your prompts and produce responses that feel so intuitive? Let’s dive into the technology behind ChatGPT and uncover the brain behind the bot.

The Core: A Neural Network Called GPT

ChatGPT is built on a type of artificial intelligence called a large language model (LLM), specifically the GPT architecture, which stands for Generative Pre-trained Transformer. This is the foundation of its language abilities. Imagine a massive digital brain composed of layers of interconnected nodes, loosely inspired by how neurons connect in the human brain. These nodes work together to analyze text, predict words, and generate coherent responses.

The GPT architecture, first introduced by OpenAI in 2018, has evolved through multiple versions, with ChatGPT relying on later iterations such as GPT-3.5 and GPT-4. Successive versions have generally grown in size and capability. For example, GPT-3 has 175 billion parameters; think of these as the “knobs” the model adjusts during training to learn patterns in language. These parameters allow ChatGPT to capture context, grammar, and even subtle nuances in how humans communicate.
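
To give a feel for where a number like 175 billion comes from, here is a rough back-of-the-envelope sketch. It uses a common rule of thumb that each Transformer layer holds about 12 × (hidden width)² weights. The layer count, hidden width, and vocabulary size below are the figures publicly reported for GPT-3, not something stated in this article, and the formula ignores biases and other small terms, so treat the result as an estimate only.

```python
# Back-of-the-envelope parameter estimate for a GPT-style Transformer.
# Assumed configuration (publicly reported for GPT-3; not from this article).
N_LAYERS = 96
D_MODEL = 12_288
VOCAB_SIZE = 50_257


def estimate_parameters(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Approximate weight count, ignoring biases and layer norms."""
    attention = 4 * d_model * d_model      # query, key, value, output projections
    feed_forward = 8 * d_model * d_model   # two matrices with a 4x wider hidden layer
    embeddings = vocab_size * d_model      # one vector per vocabulary entry
    return n_layers * (attention + feed_forward) + embeddings


total = estimate_parameters(N_LAYERS, D_MODEL, VOCAB_SIZE)
print(f"{total / 1e9:.1f} billion parameters")
```

Under these assumptions the estimate lands just under 175 billion, which shows that almost all of the “knobs” sit in the repeated layers rather than in the vocabulary table.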

Step 1: Pre-Training on a Mountain of Text

Before ChatGPT can respond to your questions, it undergoes a process called pre-training. During this phase, the model is fed an enormous dataset of text from diverse sources—books, websites, articles, and more. This dataset is like a digital library containing billions of words, representing a vast slice of human knowledge.

The goal of pre-training is to teach the model to predict the next word in a sentence based on the words that come before it. For instance, if the input is “The sky is,” the model learns that “blue” is a likely next word. By analyzing countless sentences, ChatGPT builds a statistical understanding of language patterns, grammar, and context. However, it doesn’t just memorize text—it learns the relationships between words and ideas, enabling it to generate new content.
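
The idea of next-word prediction can be sketched with a toy model that simply counts which word follows which. This is a deliberate simplification: a real GPT model uses a neural network that looks at the whole preceding context, not a table of word pairs, and the four-sentence corpus here is made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; real pre-training uses vastly more text.
CORPUS = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is cloudy",
    "the grass is green",
]


def train_bigram_counts(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for previous, following in zip(words, words[1:]):
            counts[previous][following] += 1
    return counts


def next_word_probabilities(counts, previous):
    """Turn raw follow-up counts into probabilities."""
    followers = counts[previous]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


counts = train_bigram_counts(CORPUS)
probabilities = next_word_probabilities(counts, "is")
print(probabilities)
print(max(probabilities, key=probabilities.get))
```

In this corpus “blue” follows “is” more often than any other word, so it gets the highest probability. Pre-training does the same kind of thing at enormous scale, adjusting parameters instead of filling in a count table.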

This pre-training process is computationally intensive, requiring powerful servers and vast amounts of energy. The result is a model with a broad, general understanding of language, ready to be fine-tuned for specific tasks.

Step 2: Fine-Tuning for Human Interaction

While pre-training gives ChatGPT a strong foundation, it’s not enough to make it a helpful chatbot. A raw pre-trained model is only trained to continue text, so it may ignore an instruction, ramble, or produce irrelevant responses. Therefore, OpenAI uses a process called fine-tuning to refine the model’s behavior.

During fine-tuning, human reviewers play a critical role. They write example responses and compare the model’s outputs, ranking them by quality and relevance. For example, if the model generates a vague or incorrect answer to a question, reviewers rank a more accurate and useful answer above it. These rankings are used in reinforcement learning from human feedback (RLHF): a separate reward model learns to score responses the way reviewers would, and the chatbot is then trained to favor responses that score well, such as those that are clear, polite, and factually accurate.
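
To make the idea of learning from human preferences a little more concrete, here is a minimal sketch of a pairwise preference loss, one formulation commonly described in published RLHF work. The article does not say which loss OpenAI uses, so this is an assumption, and the scores passed in are invented numbers standing in for a reward model’s output.

```python
import math


def preference_loss(score_preferred: float, score_rejected: float) -> float:
    """Pairwise loss: small when the human-preferred response scores higher.

    Computes -log(sigmoid(score_preferred - score_rejected)).
    """
    margin = score_preferred - score_rejected
    if margin < -30:
        # For very negative margins the loss is effectively -margin,
        # and this branch avoids overflow in exp().
        return -margin
    return math.log1p(math.exp(-margin))


# Hypothetical scores for two responses to the same question.
agrees_with_reviewers = preference_loss(score_preferred=2.0, score_rejected=0.5)
disagrees_with_reviewers = preference_loss(score_preferred=0.5, score_rejected=2.0)
print(agrees_with_reviewers < disagrees_with_reviewers)
```

Training pushes this loss down, which nudges the scoring model toward agreeing with the reviewers’ rankings.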

Fine-tuning also helps ChatGPT adopt its conversational tone. It learns to avoid overly technical jargon, respond with a friendly demeanor, and even inject humor when appropriate. As a result, the model becomes more than a language predictor—it transforms into a conversational partner.

The Transformer: The Engine of Understanding

The “T” in GPT stands for Transformer, a revolutionary architecture in AI. Transformers are the engine that powers ChatGPT’s ability to understand and generate text. Unlike older AI models that processed text sequentially (word by word), Transformers analyze entire sentences or paragraphs at once. This allows them to capture long-range dependencies in language—connections between words that are far apart in a sentence.

For example, in the sentence “The cat, which was hiding under the couch, finally came out,” the Transformer understands that “cat” and “came out” are related, even though they’re separated by other words. This ability to grasp context is what makes ChatGPT’s responses so coherent.

Transformers rely on a mechanism called attention, which helps the model focus on the most relevant parts of a sentence when generating a response. When you ask, “What’s the capital of France?” the model pays attention to “capital” and “France” to produce “Paris,” rather than getting distracted by irrelevant words. This attention mechanism is like a spotlight, highlighting key information to ensure accurate and contextually appropriate answers.
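
The spotlight idea can be sketched in a few lines using scaled dot-product attention, the form of attention used in Transformers. The two-dimensional vectors below are hand-picked, hypothetical values chosen so that “capital” and “france” line up with the query; in a real model these vectors have many more dimensions and are learned during training.

```python
import math


def softmax(values):
    """Convert raw scores into weights that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Hypothetical 2-dimensional vectors, hand-picked for illustration only.
tokens = ["what's", "the", "capital", "of", "france"]
keys = [[0.1, 0.0], [0.0, 0.1], [2.0, 0.5], [0.0, 0.2], [0.5, 2.0]]
values = keys  # reuse keys as values to keep the toy example small
query = [1.5, 1.5]

weights, output = attend(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token:>8}: {weight:.2f}")
```

The printed weights are largest for “capital” and “france”, so the output vector is dominated by those two tokens while filler words contribute very little.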

How ChatGPT Processes Your Input

When you type a prompt into ChatGPT, a fascinating chain of events unfolds. First, your input is split into small units called tokens, a process called tokenization, and each token is mapped to a number the model can work with. A token may be a whole word, a piece of a word, or a punctuation mark. These tokens are then fed into the model’s neural network.
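
Here is a minimal sketch of tokenization. In practice, tokenizers often split text into pieces smaller than a word, so the example uses a tiny hypothetical vocabulary and a greedy longest-match rule. Real tokenizers, such as those based on byte-pair encoding, learn their vocabulary from data and work differently in the details, so this is a simplified stand-in rather than ChatGPT’s actual tokenizer.

```python
# Hypothetical vocabulary mapping text pieces to ids; real vocabularies
# are learned from data and contain tens of thousands of entries.
VOCAB = {"why": 0, "is": 1, "the": 2, "sky": 3, "blue": 4, "?": 5,
         "un": 6, "believ": 7, "able": 8, " ": 9}
UNKNOWN_ID = -1


def tokenize(text: str) -> list[int]:
    """Greedy longest-match split of text into known vocabulary pieces."""
    text = text.lower()
    ids = []
    position = 0
    while position < len(text):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(text), position, -1):
            piece = text[position:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                position = end
                break
        else:
            ids.append(UNKNOWN_ID)  # no piece matched this character
            position += 1
    return ids


def detokenize(ids: list[int]) -> str:
    """Map ids back to text pieces and join them."""
    id_to_piece = {i: piece for piece, i in VOCAB.items()}
    return "".join(id_to_piece.get(i, "<unk>") for i in ids)


print(tokenize("Why is the sky blue?"))
print(tokenize("unbelievable"))
```

Note how “unbelievable” is not in the vocabulary as a whole word but can still be represented as three smaller pieces. That is why a model with a fixed vocabulary can handle words it has rarely or never seen.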

Next, the Transformer architecture analyzes the tokens, using its attention mechanism to weigh their importance and relationships. For instance, if you ask, “Why is the sky blue?” the model identifies “sky” and “blue” as key concepts and draws on patterns it absorbed during training, such as facts about light scattering. It does not look anything up in a database; that knowledge is encoded in the model’s parameters.

Then, ChatGPT generates a response one token at a time. At each step it assigns a probability to every possible next token, picks one (usually a likely candidate, with a little randomness to keep the output varied), adds it to the text so far, and repeats. Finally, the numerical output is converted back into human-readable text, and you see the response on your screen.
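
One common way to carry out this step is to build the response a token at a time, sampling each token from the model’s predicted probabilities. The sketch below shows that loop. The `TOY_LOGITS` table is a hypothetical stand-in for a trained model, and `temperature` is a standard sampling knob that controls how adventurous the choices are; none of these names or values come from ChatGPT itself.

```python
import math
import random

# Hypothetical stand-in for a trained model: maps the latest token to raw
# scores (logits) for candidate next tokens. A real model conditions on the
# whole context and scores its entire vocabulary.
TOY_LOGITS = {
    "<start>": {"the": 3.0, "a": 1.0},
    "the": {"sky": 2.5, "sea": 1.5},
    "a": {"sky": 1.0, "sea": 1.0},
    "sky": {"is": 3.0},
    "sea": {"is": 3.0},
    "is": {"blue": 2.0, "vast": 1.0},
    "blue": {"<end>": 3.0},
    "vast": {"<end>": 3.0},
}


def sample_next(logits, temperature, rng):
    """Sample one token with probability proportional to exp(logit / temperature)."""
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


def generate(temperature=1.0, max_tokens=10, seed=0):
    """Generate tokens one at a time until <end> or the length limit."""
    rng = random.Random(seed)
    token, output = "<start>", []
    for _ in range(max_tokens):
        token = sample_next(TOY_LOGITS[token], temperature, rng)
        if token == "<end>":
            break
        output.append(token)
    return " ".join(output)


print(generate(temperature=0.05))
print(generate(temperature=1.0, seed=3))
```

At a very low temperature the sampler almost always follows the highest-scoring path, while higher temperatures let less likely tokens through. This randomness is one reason the same prompt can produce different answers.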

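Each token takes only a fraction of a second to produce, which is why ChatGPT’s answers start streaming onto the screen almost immediately. However, a full response can take several seconds, and both speed and accuracy depend on the length and complexity of your prompt and on the model’s training.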

The Role of Context and Memory

One of ChatGPT’s standout features is its ability to maintain context in a conversation. If you ask a follow-up question, like “What about the sunset?” after asking about the sky, the model uses a context window to remember the previous exchange. This window is a limited amount of text (tokens) the model keeps in memory to ensure continuity.

For example, if you say, “Tell me about dogs,” and then ask, “What about their diet?” ChatGPT understands that “their” refers to dogs, thanks to the context window. However, this memory is temporary and limited to recent exchanges, so very long conversations may lose earlier context.
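
A limited context window can be sketched as a token budget applied to the conversation history. The example below keeps the most recent messages that fit and drops the oldest ones. The article does not describe how ChatGPT actually handles overflow, so this strategy is an assumption, and counting one token per word is a crude stand-in for a real tokenizer.

```python
def count_tokens(message: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(message.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this is dropped
        kept.append(message)
        used += cost
    return list(reversed(kept))


conversation = [
    "Tell me about dogs",
    "Dogs are domesticated mammals kept as pets",
    "What about their diet?",
]
print(fit_to_window(conversation, max_tokens=12))
```

With a budget of 12 tokens, the opening message no longer fits and is dropped. If the only mention of “dogs” had been in that first message, the model would have nothing to tell it what “their” refers to, which is how long conversations lose earlier context.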

Limitations and Challenges

Despite its impressive abilities, ChatGPT isn’t perfect. For one, it can sometimes “hallucinate” facts—generating plausible but incorrect information. This happens because the model relies on patterns in its training data, not real-time fact-checking. For instance, if asked about a recent event, ChatGPT may struggle if the information wasn’t in its training data.

Moreover, the model can be sensitive to how prompts are phrased. A slight rewording of a question might yield a different response. OpenAI is continually working to address these issues through updates and better training methods.

Ethical concerns also arise. Since ChatGPT is trained on vast internet data, it can inadvertently reflect biases present in that data, such as gender or cultural stereotypes. OpenAI mitigates this by implementing safety protocols and filters, but challenges remain. Additionally, the model’s energy-intensive training process raises environmental concerns, prompting efforts to make AI more sustainable.

The Future of ChatGPT and Beyond

ChatGPT represents a leap forward in AI, but it’s just the beginning. OpenAI and other organizations are exploring ways to make language models even more powerful and versatile. For example, future models may integrate real-time data access, allowing them to provide up-to-date information without relying solely on pre-training. Meanwhile, advancements in multimodal AI could enable ChatGPT to process images, videos, or even voice inputs alongside text.

The potential applications are vast. ChatGPT is already being used in education, customer service, healthcare, and creative industries. However, as AI becomes more integrated into daily life, questions about privacy, accountability, and misuse will grow. Ensuring that AI serves humanity responsibly is a challenge for developers and society alike.

Why ChatGPT Feels So Human

What makes ChatGPT feel like a human conversation partner? It’s a combination of its massive training data, sophisticated Transformer architecture, and careful fine-tuning. The model doesn’t just regurgitate memorized text. Instead, it composes each response by applying learned patterns to the context of your request. When you ask it to write a story or explain quantum physics, it draws on its “experience” from training to craft something tailored to what you asked.

Yet, ChatGPT doesn’t truly understand the world like humans do. It lacks emotions, personal experiences, or consciousness. Instead, it simulates understanding by leveraging statistical patterns. This distinction is crucial—it’s a tool, not a sentient being, no matter how lifelike it seems.

Conclusion

ChatGPT is a marvel of modern technology, blending the power of neural networks, Transformers, and human feedback to create a conversational AI that feels almost magical. From pre-training on vast datasets to fine-tuning for helpfulness, every step of its development is designed to make it a versatile and reliable tool. While it has limitations, its ability to understand and generate human-like text has transformed how we interact with technology.

As we look to the future, ChatGPT and its successors will likely become even more integrated into our lives, helping us learn, work, and create in ways we can’t yet imagine. The brain behind the bot is a testament to human ingenuity—and a reminder of the incredible potential of AI when used thoughtfully.
